You May Also Enjoy

Teaching Visualizations

less than 1 minute read

To support teaching an undergraduate class, I created visualizations of several basic machine learning algorithms, such as K-Means clustering. These visualizations help students better understand the concepts underlying the algorithms and how they work in practice.

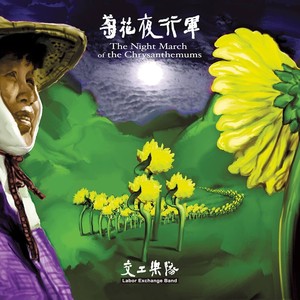

Music Sharing: Homesickness

less than 1 minute read

Variational Inference

less than 1 minute read

Widok z ziarnkiem piasku, Wisława Szymborska

1 minute read
